30+ Tips: A Practical Guide to Solving LLM Context Manually + The Automated Solution

A practical guide to mastering LLM context

Ever watched your powerful, all-knowing LLM (say, Gemini 3) suddenly get “dumb”?

You ask it about a new coding library, and it confidently hallucinates a function that’s a year out of date.

Why does this happen?

Old Knowledge: An AI’s “brain” is frozen in time. Its training data has a hard cutoff date, so it knows nothing about new APIs, libraries, or events that happened after it was trained.

“Context Rot”: You try to fix this by pasting in 50 pages of documentation. Suddenly, the AI can’t follow simple instructions, because you’ve “slammed” its context window with too many tokens. This “context rot” degrades the model’s reasoning, and it loses track of what matters.

The secret to working with LLMs isn’t just what you ask; it’s giving them exactly the right information for the specific task at hand.
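To make that idea concrete, here is a minimal sketch of picking only the relevant pieces of documentation and stopping at a token budget, instead of pasting in everything. The function names (`estimate_tokens`, `select_context`), the word-overlap scoring, and the "about 4 characters per token" rule are all illustrative assumptions, not a real tokenizer or any particular tool's method.

```python
def estimate_tokens(text: str) -> int:
    """Very rough size estimate. ASSUMPTION: ~4 characters per token;
    a real tokenizer will give different counts."""
    return max(1, len(text) // 4)


def select_context(chunks: list[str], query: str, token_budget: int) -> list[str]:
    """Return the chunks most related to the query that fit in the budget.

    Relevance here is plain word overlap with the query (a hypothetical
    stand-in for whatever ranking you actually use).
    """
    query_words = set(query.lower().split())

    def overlap(chunk: str) -> int:
        return len(query_words & set(chunk.lower().split()))

    selected: list[str] = []
    used = 0
    # Visit the most relevant chunks first.
    for chunk in sorted(chunks, key=overlap, reverse=True):
        if overlap(chunk) == 0:
            continue  # unrelated text only adds noise
        cost = estimate_tokens(chunk)
        if used + cost > token_budget:
            continue  # would overflow the budget, skip it
        selected.append(chunk)
        used += cost
    return selected


docs = [
    "The connect function opens a database session.",
    "Release notes for the billing dashboard.",
    "Use connect with a timeout argument to avoid hangs.",
]
context = select_context(docs, "how do I use connect with a timeout", token_budget=100)
prompt = "\n".join(context) + "\n\nQuestion: how do I use connect with a timeout"
```

The unrelated release-notes chunk never reaches the prompt, and a tighter budget keeps only the best-matching chunk.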

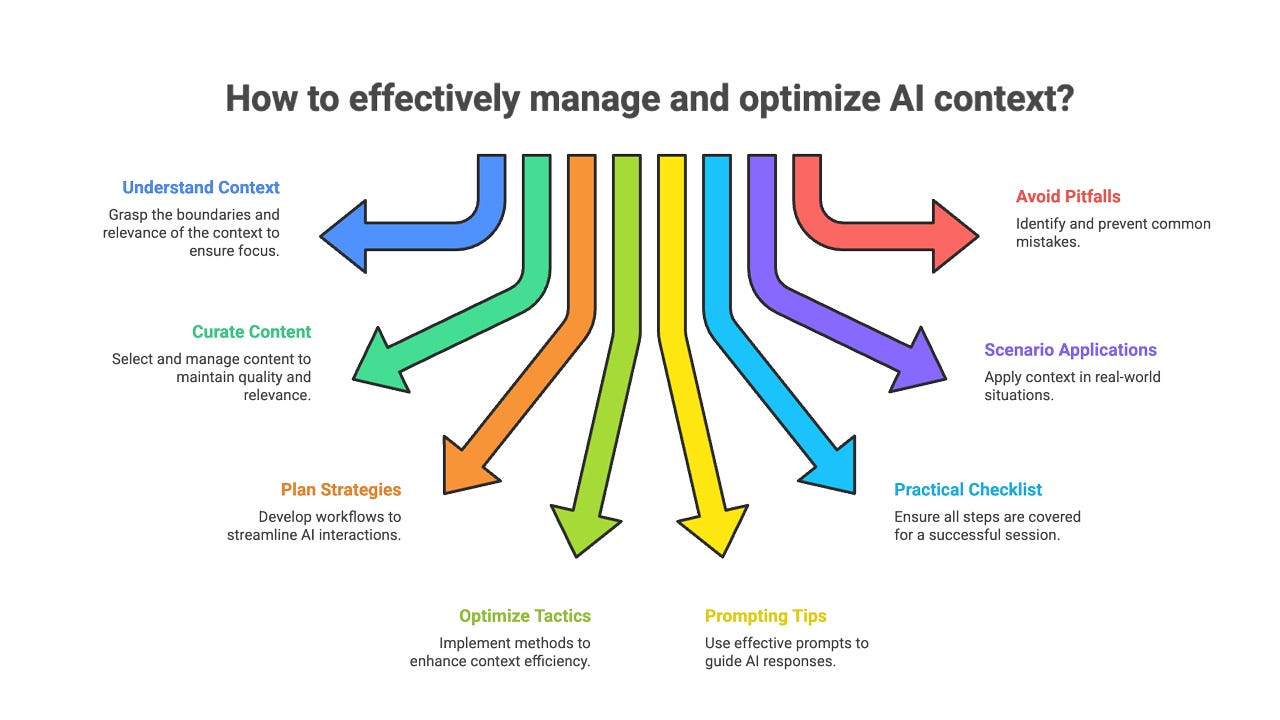

Below you’ll find 30+ actionable tips & tricks, grouped by category, each with context (why it matters) and what to do. These tips cover the manual way to manage context. After that, a separate section covers the automated approach that solves this problem for good.