How AI Agents Learn from Ants

Collaborative Autonomy Through Collective Strategies

Ants, Humans, and the Geometry of Collective Problem-Solving

“While ants perform more efficiently in larger groups, the opposite is true for humans.”

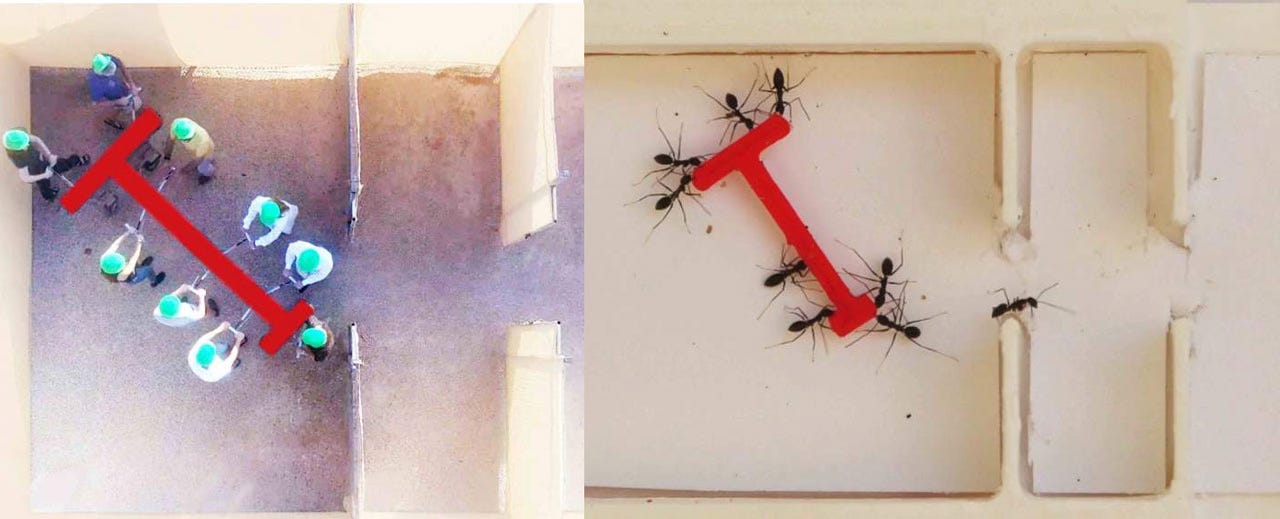

In both biology and machine learning, creativity can emerge in fascinating ways. Simple agents can combine to tackle problems larger than themselves. In a recent study, researchers introduced a scalable "piano movers' puzzle" that revealed how collective intelligence and communication limits affect group performance.

From a machine learning (ML) view, we might see each solver, an ant colony or a human team, as an algorithmic entity that navigates a combinatorial maze. This text explores these findings and reinterprets them through a machine learning framework.

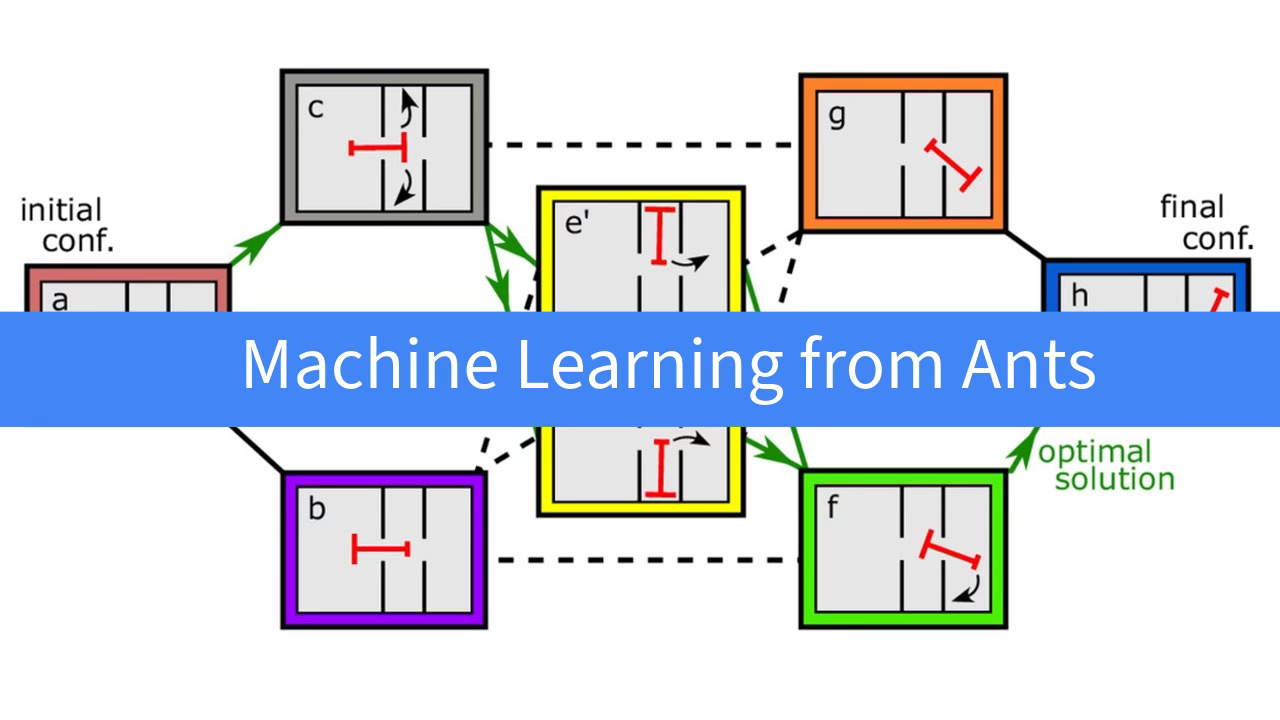

The Puzzle as a Search Space

In the experiments, both ants and humans had to maneuver a T-shaped load from one side of a partitioned arena to the other. Think of this environment as a search space, the same concept used in ML to describe all possible model configurations or paths toward a solution:

Configuration Space: A continuous three-dimensional manifold (x,y,θ) in which the load’s location and orientation evolve over time.

Discretized Graph Representation: When humans attempt to “mentally reduce” the problem, they effectively build a node-and-edge graph, where each node is a “state” (e.g., which chamber the load currently occupies), and edges represent valid (or invalid) transitions.

In ML, we often discretize or map complex continuous spaces (like images, text, or puzzle states) into more manageable representations. This puzzle nicely mirrors how an ML algorithm partitions input spaces and navigates them step by step.
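As a rough illustration of that discretization step, the sketch below bins a continuous pose (x, y, θ) into a coarse graph node and collects the transitions a trajectory passes through. The arena layout (walls at x = 1.0 and x = 2.0), the four orientation bins, and the function names are all assumptions made for this example; they are not taken from the study.

```python
import math

# Hypothetical arena: three chambers split by walls at x = 1.0 and x = 2.0.
WALLS_X = (1.0, 2.0)
N_ORIENTATION_BINS = 4  # coarse orientation bins of 90 degrees each


def discretize(x: float, y: float, theta: float) -> tuple[int, int]:
    """Map a continuous pose to a coarse (chamber, orientation_bin) node.

    In this toy layout the chamber depends only on x, so y is ignored.
    """
    chamber = sum(x >= wall for wall in WALLS_X)  # 0, 1 or 2
    theta = theta % (2 * math.pi)                 # wrap the angle
    bin_width = 2 * math.pi / N_ORIENTATION_BINS
    orientation_bin = int(theta // bin_width) % N_ORIENTATION_BINS
    return chamber, orientation_bin


def trajectory_to_edges(poses):
    """Collect the distinct node-to-node transitions along a trajectory."""
    nodes = [discretize(*pose) for pose in poses]
    return {(a, b) for a, b in zip(nodes, nodes[1:]) if a != b}


# A short made-up trajectory that crosses from chamber 0 into chamber 1.
poses = [(0.5, 0.2, 0.1), (0.9, 0.2, 0.1), (1.2, 0.2, 0.1)]
print(trajectory_to_edges(poses))
```

The continuous manifold never disappears; the graph is just a lossy summary that is easier to reason about, which is the trade-off the human solvers appear to make.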

Ants as a Model of Emergent Cohesion

Collective “Memory” in the Order Parameter

A central finding of the study is that large groups of ants persistently move the load in one direction even upon hitting barriers. This leads to:

Wall-sliding behavior and

Short-term emergent memory encoded in the ants’ “collective ordered state.”

From an ML standpoint, ants’ behavior recalls ensemble methods such as random forests, or swarm-based optimization algorithms, which rely on many agents that each have limited individual intelligence. Although each ant follows only local rules, the entire colony “learns” to exploit wall-sliding as a heuristic, much like a maze-solving algorithm that follows one boundary until it finds an exit. The “collective memory” emerges because, once in motion, ants continue pulling in the same direction, effectively storing directionality in their physical alignment.

Scaling Performance with Group Size

In machine learning, we often see improved performance when increasing the size of a collaborative ensemble (up to a point). The same occurred with the ants:

Single-ant attempts resembled random walks with frequent stopping.

Small group attempts showed modest improvements.

Large group attempts drastically outperformed the others, thanks to strongly aligned pulling efforts that overcame direction changes.

This parallels how scaling model ensembles, or indeed large language models, can yield better generalization. The ant colony’s “emergent memory” is akin to synergy in an ensemble that collectively “remembers” where it came from.
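A toy model can make the group-size effect concrete. In the sketch below, every ant tries to pull along the same heading but with independent angular noise, and we measure how far the net pull drifts from that heading. This is an illustrative assumption, not the model used in the study; the function name, the Gaussian noise, and its default width are all made up for the example.

```python
import math
import random


def mean_heading_error(n_ants: int, noise_sd: float = 1.0,
                       trials: int = 500, seed: int = 0) -> float:
    """Average angular error (radians) of the group's net pull direction.

    Toy assumption: every ant aims at heading 0, but each individual
    pull is perturbed by independent Gaussian angular noise.
    """
    rng = random.Random(seed)
    total_error = 0.0
    for _ in range(trials):
        # Sum the unit pull vectors of all ants in this trial.
        fx = fy = 0.0
        for _ in range(n_ants):
            angle = rng.gauss(0.0, noise_sd)
            fx += math.cos(angle)
            fy += math.sin(angle)
        # Direction of the net force relative to the intended heading.
        total_error += abs(math.atan2(fy, fx))
    return total_error / trials


for n in (1, 10, 100):
    print(n, round(mean_heading_error(n), 3))
```

In this simplified setting the individual errors average out as the group grows, so the net pull of a large group holds its direction far more steadily than a single ant does. That is one way to read the persistence described above.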

Humans as Cognitive Yet Conflicted Agents

Depth-First Search and Greedy Decisions

Individual humans, in contrast, leveraged sophisticated cognitive maps of the puzzle:

Depth-First Search (DFS)-like strategies: They systematically tested potential paths, “pruning” edges that led to dead ends.

Long-Term Memory: Once an edge was found invalid, it was rarely tested again.

However, group attempts in humans introduced the necessity for consensus, an interplay between personal reasoning and social dynamics. When communication was restricted, humans defaulted to a “greedy” choice, pulling toward the path that superficially seemed most direct. This situation resonates with coordination failures seen in multi-agent ML scenarios, where local policies converge on suboptimal solutions if signals are limited.
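The individual strategy described above can be sketched as a depth-first search that keeps a long-term record of failed transitions. The puzzle graph and its state names here are hypothetical, chosen only to show one dead end and one valid route.

```python
def dfs_with_memory(graph, start, goal):
    """Depth-first search that remembers dead-end edges.

    Returns (path, dead_edges); path is None if the goal is unreachable.
    """
    dead_edges = set()   # long-term memory of transitions that failed
    visited = {start}
    path = [start]

    while path:
        node = path[-1]
        if node == goal:
            return path, dead_edges
        # Take the first neighbor that is unvisited and not known to be dead.
        for nxt in graph.get(node, []):
            if nxt not in visited and (node, nxt) not in dead_edges:
                visited.add(nxt)
                path.append(nxt)
                break
        else:
            # Nothing left to try here: backtrack and record the failed edge.
            path.pop()
            if path:
                dead_edges.add((path[-1], node))
    return None, dead_edges


# Hypothetical coarse puzzle graph (state names are illustrative only).
PUZZLE = {
    "start": ["narrow_gap", "wide_gap"],
    "narrow_gap": ["jammed"],
    "jammed": [],
    "wide_gap": ["middle"],
    "middle": ["exit"],
    "exit": [],
}

path, dead = dfs_with_memory(PUZZLE, "start", "exit")
print(path)
print(dead)
```

A "greedy" group with restricted communication would correspond to always taking the first neighbor and having no shared `dead_edges` set to consult, which is why it can keep returning to the same unproductive move.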

Communication Constraints: When More Isn’t Always Better

When large groups of humans could communicate freely, they deliberated:

Slower to start, but

Better alignment on a less obvious (yet superior) path.

By contrast, large groups with restricted communication swiftly defaulted to a single motion without deliberation, a quick consensus that often led to mistakes. In machine learning art terms, one might imagine each human “node” as a recurrent neural network unit. Without adequate “links” (communication channels), each unit fails to share hidden states effectively. The result is a neat but often suboptimal “vote” for the common denominator.

Lessons for Machine Learning and Swarm Robotics

Local Rules, Global Intelligence: Ants show how powerful collective solutions can arise from simple rules. This is mirrored in swarm intelligence algorithms (e.g., ant colony optimization), which harness local interactions to solve global optimization problems.

Communication Structures: Humans reveal that even intelligent agents can degrade in performance if communication is restricted. In ML or multi-robot systems, the design of the communication protocol is critical to maintain synergy.

Scaling and Diversity: There exists a trade-off between the benefits of scaling a group (ants do better in larger groups) and the need for complex consensus (humans sometimes do worse in larger, communication-limited groups). In ML ensembles, adding more models typically increases performance, unless they cannot effectively share or aggregate their diverse insights.

Heuristics vs. Direct Knowledge: Ants used a boundary-following heuristic, while humans used more explicit mental models. In ML, we see similarly that heuristics (like gradient-based or local search) can be powerful, but so can global knowledge (like deeper layers in neural nets). The design trade-offs depend on the environment and the cost of communication.

Ant Colony Optimisation (ACO)

Ant colony optimisation borrows the pheromone-trail principle from real ants. Simulated "ant" agents explore a solution space, and the most promising paths are reinforced with digital "pheromones". Paths with stronger signals become more attractive options, steering the collective algorithm towards near-optimal solutions.

This approach powers a broad range of applications:

Scheduling: In steel plants, ACO quickly proposes production schedules that balance constraints and reduce costs.

Routing: Ant-inspired methods find efficient paths in logistics and transportation, minimizing travel time and fuel usage.

Traveling Salesperson Problem: ACO provides powerful heuristics for discovering short routes among many cities, clarifying how local decisions can merge into globally efficient outcomes.
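To make the pheromone mechanism concrete, here is a minimal sketch of a basic ant-system loop on a tiny traveling salesperson instance. The parameter names and default values (`alpha`, `beta`, `evaporation`, number of ants and iterations) are illustrative assumptions rather than tuned settings, and the code assumes all cities have distinct coordinates.

```python
import math
import random


def tour_length(tour, dist):
    """Length of the closed tour, returning to the starting city."""
    n = len(tour)
    return sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))


def aco_tsp(coords, n_ants=10, n_iters=50, alpha=1.0, beta=2.0,
            evaporation=0.5, seed=0):
    """Basic ant-system sketch; assumes distinct city coordinates."""
    rng = random.Random(seed)
    n = len(coords)
    dist = [[math.dist(a, b) for b in coords] for a in coords]
    pheromone = [[1.0] * n for _ in range(n)]
    best_tour, best_len = None, float("inf")

    for _ in range(n_iters):
        tours = []
        for _ in range(n_ants):
            # Each ant builds a tour city by city.
            tour = [rng.randrange(n)]
            unvisited = set(range(n)) - {tour[0]}
            while unvisited:
                cur = tour[-1]
                candidates = sorted(unvisited)
                # Prefer edges with strong pheromone and short distance.
                weights = [pheromone[cur][j] ** alpha * (1.0 / dist[cur][j]) ** beta
                           for j in candidates]
                nxt = rng.choices(candidates, weights=weights)[0]
                tour.append(nxt)
                unvisited.remove(nxt)
            tours.append(tour)

        # Evaporation: old trails fade.
        for i in range(n):
            for j in range(n):
                pheromone[i][j] *= 1.0 - evaporation

        # Deposit: shorter tours leave more pheromone on their edges.
        for tour in tours:
            length = tour_length(tour, dist)
            if length < best_len:
                best_tour, best_len = tour, length
            for i in range(n):
                a, b = tour[i], tour[(i + 1) % n]
                pheromone[a][b] += 1.0 / length
                pheromone[b][a] += 1.0 / length

    return best_tour, best_len


# Four cities on the corners of a unit square.
cities = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
best_tour, best_len = aco_tsp(cities)
print(best_tour, best_len)
```

No single ant knows the best route. The bias toward short, well-reinforced edges accumulates over iterations, which is the "local decisions merging into globally efficient outcomes" idea in miniature.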

The Aesthetics of Collective Intelligence: Toward a Machine Learning Art

Viewing these findings through a creative, artistic lens reminds us that machine learning is as much about emergent patterns and collective beauty as it is about solving tasks:

Ant Behavior as Generative Art: The random-yet-cohesive swirling of ant trajectories against a puzzle boundary can be seen as spontaneous generative art, reminiscent of fractal-like patterns in swarm paintings.

Human Consensus as Performance: The slow, deliberate gatherings or “huddles” before a group of humans decides on a route mirror choreographed performances, each “dancer” influencing the load’s orientation.

Such observations hint at new forms of ML-inspired art in which multi-agent systems generate aesthetic movements or patterns. The puzzle, and each species' solution path, becomes the basis for new shapes and symbolic behaviors.

“Simple minds can easily enjoy scalability while complex brains require extensive communication to cooperate efficiently.”

MLearning Reflections

By reframing this ant-and-human puzzle as a machine learning narrative, we glean insight into how:

Simple, local, homogeneous rules can forge robust solutions at scale.

Rich, heterogeneous cognition can excel at smaller scales but requires extensive communication to scale effectively without losing nuanced knowledge.

There is a delicate balance between structure (individual cognition and heuristics) and fluidity (collective alignment and emergent memory), much like the way creativity unfolds in large, collaborative ML systems.

Ultimately, the “piano movers’” puzzle becomes a vivid metaphor for the complexities and beauties of intelligence, be it biological, human, or algorithmic.

Whether you are a single solver or a swarm of carriers, success lies in the artful balance of knowledge, communication, and persistence.

And as with any good machine learning system, the true “art” often emerges from the interplay between many individual parts working and learning together.

Comparing cooperative geometric puzzle solving in ants versus humans

This study redefines what I thought about group problem-solving.
